Overlay visuals for live-streamed events using Unity Engine

This project benefited from research and development I conducted as part of a bursary from The Arts Council of Ireland.

The following is a brief write-up on the creative-tech side of a recent project I produced with Crash Ensemble, Ireland’s leading new music ensemble.

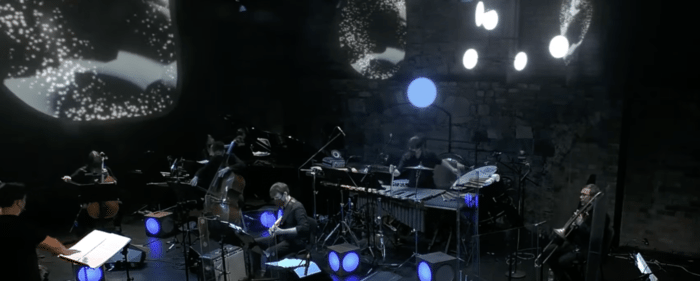

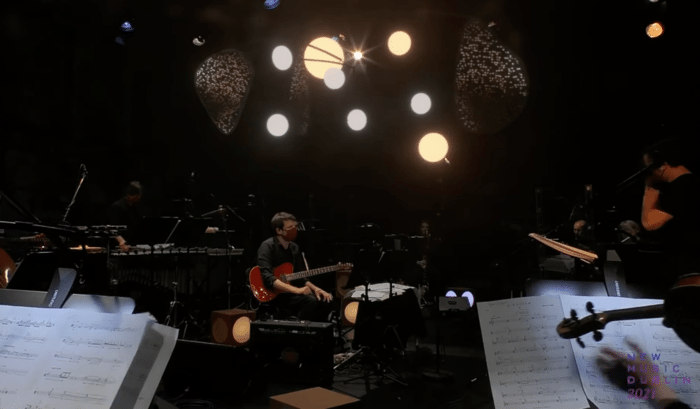

The project: Crash Ensemble were premiering a new piece, Wingform, by composer and musician Barry O'Halpin as part of the New Music Dublin festival, and asked if I’d create some live visuals for it.

The catch: the show was to be live-streamed only, with no live audience, so no nice on-stage visuals.

Constraint: I’m generally not a fan of intercutting film/video art with live performance; I like to see the musicians all the time. So I wanted to try adding a visual element that looked like it was in the space with the musicians.

Idea: a 3D, Calder-esque mobile that would appear to hang above the players on stage. Its elements would be a mix of what looked like LED video panels and light orbs, and it would slowly turn, as mobiles do.

Catch 2: the live stream would be multi-camera, so my visuals would need to look correct from each camera angle and cut live whenever the cameras cut.

Making the thing

I settled on the following approach:

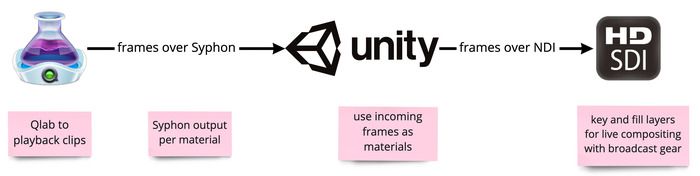

The streaming was handled by a professional production team using digital broadcast gear, so I needed to fit in with their workflow and technology. That meant sending them key/fill video feeds. Key/fill is a broadcast technique for live compositing: the fill feed carries the content itself, and the key feed is a greyscale matte generated from the transparency (alpha) in the source. Together, they let the production's vision mixer overlay graphics on a live camera source.
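To make the key/fill split concrete, here is a minimal Python sketch of what happens to one RGBA frame: the fill keeps the colour, the key carries the alpha as greyscale, and a linear keyer on the vision mixer blends the fill over the camera image using that key. It assumes straight (non-premultiplied) alpha with values from 0.0 to 1.0; some mixers expect a premultiplied fill instead. In the actual setup this work was done by KlakNDI, NDI Outlet and the mixer, not by code like this, and the function names are hypothetical.

```python
from typing import List, Tuple

RGBA = Tuple[float, float, float, float]  # straight (non-premultiplied) alpha, 0.0-1.0
RGB = Tuple[float, float, float]


def split_key_fill(frame: List[RGBA]) -> Tuple[List[RGB], List[RGB]]:
    """Split an RGBA frame into a fill feed (colour) and a key feed (alpha as greyscale)."""
    fill = [(r, g, b) for r, g, b, _a in frame]
    key = [(a, a, a) for _r, _g, _b, a in frame]
    return fill, key


def mix_key_fill(fill: List[RGB], key: List[RGB], camera: List[RGB]) -> List[RGB]:
    """Linear keyer: out = fill * k + camera * (1 - k), per pixel and channel."""
    out: List[RGB] = []
    for f, k, c in zip(fill, key, camera):
        alpha = k[0]  # the key is greyscale, so any one channel holds the matte value
        out.append(tuple(fc * alpha + cc * (1.0 - alpha) for fc, cc in zip(f, c)))
    return out


frame = [(1.0, 0.0, 0.0, 1.0), (0.0, 1.0, 0.0, 0.0)]  # opaque red, fully transparent green
fill, key = split_key_fill(frame)
print(mix_key_fill(fill, key, [(0.5, 0.5, 0.5)] * 2))  # [(1.0, 0.0, 0.0), (0.5, 0.5, 0.5)]
```

The opaque red pixel replaces the grey camera image, while the fully transparent pixel lets the camera through untouched, which is exactly why the alpha has to survive all the way to the mixer.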

This was actually the trickiest part: finding a way to get key/fill feeds out of Unity. I got this working first so I knew I could proceed with the rest of the idea.

The solution here was KlakNDI, the NDI plugin for Unity by GitHub user Keijiro. Keijiro also makes KlakSyphon, a great Syphon plugin for Mac, which I used in this project too.

The next challenge was to get this NDI + alpha feed out to physical key/fill outputs over SDI. I found NDI Outlet from Sienna, which was the only software cost in the project (€150). While it only gets 1.5 stars on the Mac App Store, it worked flawlessly for me. Note that this app is Mac-only and only supports Blackmagic UltraStudio devices.

Using NDI Outlet, I was able to get key and fill video streaming from Unity to my UltraStudio Mini HD without too much hassle.

The Mobile

I modeled and UV-mapped the mobile in Blender, inside a model of the theatre, to get the scale right.

I then imported and reassembled the mobile inside Unity and set up four cameras: three for the three camera angles, and one for an overhead view to send to the lighting desk (more on this later).

The four-camera limit came down to hardware. I was running all of this on a Mac Mini with an external GPU, and between QLab, Unity, multiple Syphon feeds, NDI, and the NDI-to-Blackmagic conversion all running simultaneously, the hardware maxed out at four Unity cameras.
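For the overlay to look like it's in the room, each stage-facing virtual camera has to match its real counterpart: placed where the real camera stands, pointing the same way, and with the same field of view. The post doesn't say how the cameras were matched, so the following is only a sketch of the field-of-view part, assuming you know each broadcast camera's focal length and sensor height (the values below are hypothetical). It uses the pinhole-camera relation; Unity's `Camera.fieldOfView` is the vertical field of view in degrees, so the result is the kind of value you would enter there.

```python
import math


def vertical_fov_degrees(sensor_height_mm: float, focal_length_mm: float) -> float:
    """Pinhole-camera vertical field of view, 2 * atan(h / (2 * f)), in degrees."""
    if sensor_height_mm <= 0 or focal_length_mm <= 0:
        raise ValueError("sensor height and focal length must be positive")
    return math.degrees(2.0 * math.atan(sensor_height_mm / (2.0 * focal_length_mm)))


# Hypothetical lens settings (sensor height, focal length in mm), not from the show.
stage_cameras = {"wide": (5.4, 8.0), "left": (5.4, 25.0), "right": (5.4, 25.0)}
for name, (h, f) in stage_cameras.items():
    # Candidate value for Camera.fieldOfView on the matching Unity camera.
    print(name, round(vertical_fov_degrees(h, f), 1))
```

A zoom on the real camera changes its focal length, so a static match like this would only hold for framings agreed in advance.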

Textures

Within Unity, I set up Syphon textures using the KlakSyphon Unity plugin. If you aren’t familiar with it, Syphon is a macOS technology that lets applications share video frames through the GPU, for high performance and low latency.

Content

Thanks to Syphon, I could then pump in animated textures from any Syphon-capable playback application. In this case, due to time constraints, I fell back on an old favourite, QLab. While it isn’t really suited to this more VJ-style gig, I know it inside out, so there’s that.

All content was procedurally generated in Blender, based on chats with composer Barry, and rendered out as seamless loops with Eevee. I’d have loved to do live procedural graphics, but there was enough going on already.

Here’s an example clip (the Vimeo embed doesn’t render nicely here).
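One common way to make procedural animation loop seamlessly (not necessarily how these clips were built) is to drive every animated parameter with a function that completes a whole number of periods over the loop, such as a sine wave. A minimal Python sketch, with a hypothetical `loop_value` helper and loop length:

```python
import math


def loop_value(frame: int, loop_frames: int, cycles: int = 1, amplitude: float = 1.0) -> float:
    """A parameter that repeats every `loop_frames` frames.

    `cycles` must be a whole number: the sine then completes exactly that many
    periods over the loop, so the value (and its rate of change) at frame
    `loop_frames` match frame 0 and the wrap has no visible jump.
    """
    if loop_frames <= 0:
        raise ValueError("loop_frames must be positive")
    return amplitude * math.sin(2.0 * math.pi * cycles * frame / loop_frames)


LOOP = 240  # hypothetical loop length in frames
# Render frames 0..LOOP-1 only: frame LOOP would duplicate frame 0.
values = [loop_value(f, LOOP, cycles=2) for f in range(LOOP)]
```

Because frame `LOOP` lands back on the value of frame 0, rendering that end frame as well would show the same image twice at the wrap, which reads as a small hitch in playback.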

Results

Unfortunately, Vimeo embeds aren’t rendering properly here, so here’s a link to a cut of clips featuring the mobile. I forgot to include the sound; I’ll fix that later.

Live, it worked like this: the director (cutting between cameras) and I were on radio comms. When I got a standby for a particular camera, I would trigger the matching virtual Unity camera from QLab (via an OSC command); the director would then cut to that camera, and the correct overlay would be added on top. Unfortunately, he refused to rehearse (typical of these ex-rock-n-roll type dudes who have seen it all before), so some of the cuts ended up with the wrong virtual camera, or none at all! The edit above omits these!
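The camera trigger is just a small OSC message: QLab can send OSC from a Network cue, and something on the Unity side listens for it and switches cameras. The post doesn't give the OSC address or which Unity receiver was used, so this Python sketch assumes a hypothetical `/camera` address carrying a single int32 camera index. It encodes the message following the OSC 1.0 layout (NUL-padded strings aligned to 4 bytes, a `,i` type-tag string, a big-endian int32), decodes it again, and tracks which of the three stage-facing virtual cameras is live.

```python
import struct
from typing import List, Tuple


def _osc_string(s: str) -> bytes:
    """OSC-string: ASCII bytes plus at least one NUL, padded to a multiple of 4 bytes."""
    data = s.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc_int(address: str, value: int) -> bytes:
    """OSC message with one int32 argument: address, ',i' type tags, big-endian int32."""
    return _osc_string(address) + _osc_string(",i") + struct.pack(">i", value)


def _read_osc_string(packet: bytes, offset: int) -> Tuple[str, int]:
    """Read an OSC-string at offset; return it and the offset of the next aligned field."""
    end = packet.index(b"\x00", offset)
    text = packet[offset:end].decode("ascii")
    next_offset = end + 1
    next_offset += -next_offset % 4  # skip the NUL padding up to the 4-byte boundary
    return text, next_offset


def decode_osc_int(packet: bytes) -> Tuple[str, int]:
    address, offset = _read_osc_string(packet, 0)
    type_tags, offset = _read_osc_string(packet, offset)
    if type_tags != ",i":
        raise ValueError(f"expected one int32 argument, got type tags {type_tags!r}")
    (value,) = struct.unpack_from(">i", packet, offset)
    return address, value


class VirtualCameraSwitcher:
    """Hypothetical receiver logic: exactly one stage-facing virtual camera is live."""

    def __init__(self, names: List[str]) -> None:
        self.names = names
        self.active = 0

    def handle(self, packet: bytes) -> str:
        address, index = decode_osc_int(packet)
        # Ignore other addresses and out-of-range indices rather than going blank.
        if address == "/camera" and 0 <= index < len(self.names):
            self.active = index
        return self.names[self.active]


switcher = VirtualCameraSwitcher(["wide", "left", "right"])  # hypothetical names
packet = encode_osc_int("/camera", 2)
print(packet)                   # b'/camera\x00,i\x00\x00\x00\x00\x00\x02'
print(switcher.handle(packet))  # right
```

Over the network, the encoded bytes would typically travel as a single UDP datagram. In Unity, `handle` could correspond to enabling the selected `Camera` and disabling the others; keeping the last valid camera on a bad message is one way to avoid the "none at all" case.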

Overall, I was really happy with the results as an experiment, and would love to build on it.

Lighting

Finally, a ‘stretch goal’ was to semi-automate the stage lighting by feeding some data from Unity to the lighting designer, SJ Shields. This was a bit half-baked, as we’d no time to test, but it sort of worked. Essentially, I sent her the overhead virtual camera feed from Unity with a heavy blur applied, and she used that feed to sample colours for the overhead lighting. This lit the stage and performers with the same hue the mobile would have if it were actually there in the space: virtual production-ish! SJ also controlled the on-stage light boxes (the cubes with circular cutouts), which had wireless, DMX-controlled RGB bulbs inside (Astera NYX bulbs).
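The colour sampling happened on SJ's lighting desk, and the post doesn't say which desk feature or fixture patch was used. As a rough sketch of the idea only, the Python below averages a rectangular region of an 8-bit RGB frame (heavy blur does much the same job, washing out detail so only the dominant hue of that patch survives) and turns the result into clamped 0-255 DMX levels for an RGB fixture. The region coordinates, the intensity master and the channel order are all hypothetical; a real fixture's channel layout depends on its DMX mode, which this does not model.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # 8-bit RGB from the (blurred) overhead feed


def region_average(frame: List[List[Pixel]], x0: int, y0: int, x1: int, y1: int) -> Pixel:
    """Mean colour of the half-open box x0 <= x < x1, y0 <= y < y1 (indexed frame[y][x])."""
    pixels = [frame[y][x] for y in range(y0, y1) for x in range(x0, x1)]
    if not pixels:
        raise ValueError("empty sampling region")
    n = len(pixels)
    return tuple(round(sum(p[c] for p in pixels) / n) for c in range(3))


def to_dmx_rgb(colour: Pixel, intensity: float = 1.0) -> List[int]:
    """Scale by a master intensity and clamp each channel to the DMX range 0-255."""
    return [max(0, min(255, round(c * intensity))) for c in colour]


# Hypothetical 2x2 frame: reds across the top row, blues along the bottom.
frame = [[(200, 0, 0), (100, 0, 0)],
         [(0, 0, 40), (0, 0, 60)]]
colour = region_average(frame, 0, 0, 2, 2)
print(colour)                   # (75, 0, 25)
print(to_dmx_rgb(colour, 2.0))  # [150, 0, 50]
```

Sampling one region per overhead fixture is what ties each light's hue to the part of the mobile hanging above it.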